Parallelization

Parallelization in Yambo by Yambo Team

Fermi supercomputer @ CINECA HPC center.


Introduction

In this tutorial we will see how to set up the variables governing the parallel execution of yambo in order to perform efficient calculations in terms of both CPU time and memory usage. As a test case we will consider the trans-polyacetylene (TPA) polymer, a quasi-one-dimensional system. Because of its reduced dimensionality, GW calculations on it turn out to be very delicate. Besides the usual convergence studies with respect to k-points and sums over bands, nanostructures require a considerable amount of vacuum in order to treat the system as isolated, which translates into a large number of plane waves. As for other tutorials, this tutorial is meant to illustrate the functionality of the key variables and to run in a reasonable time. It is not intended to reach the accuracy needed to reproduce experimental results. Please also note that the scaling performance illustrated below may depend significantly on the underlying parallel architecture. Nevertheless, some general considerations are tentatively drawn when discussing the results.

Trans-Polyacetylene.

The material

- Trans-Polyacetylene

- Supercell 30.0 x 30.0 x 4.667 [a.u.]

- Plane-wave cutoff 40 Ry (equal to 117581 reciprocal-lattice, RL, vectors)

TPA is an organic polymer with the repeating unit (C2H2)n. It is an important polymer, since the discovery of polyacetylene and of its high conductivity upon doping helped to launch the field of organic conductive polymers. It consists of a long chain of carbon atoms with alternating single and double bonds. Each carbon is bonded to one hydrogen atom to complete its sp2 connectivity. Because of its technological relevance, TPA has been the subject of several studies, also in the framework of many-body perturbation theory [2-4].

In the following we will describe a GW calculation on TPA and play with the input variables governing the yambo parallelism. This parallelism is based on a hybrid strategy combining MPI (Message Passing Interface) with shared-memory OpenMP (Open Multi-Processing). Yambo implements several levels of MPI parallelism (up to 4, depending on the specific runlevel chosen). These run over physical indexes such as the q and k point meshes, sums over bands, valence-conduction transitions, etc. OpenMP, instead, is mostly used to deal with plane waves and real-space grids. For each example you can find the corresponding input file with a brief description of the most relevant variables. We will also use this system to discuss some technical aspects of yambo in detail.

[01] Initialization: 01_init (yambo -i -V RL)

This section describes the standard initialization procedure, which is common to all yambo runs.

To run this example, enter the YAMBO directory (where you have previously created the SAVE folder). Before running the initialization procedure, let's have a look at the database produced by the yambo interfaces. To do that, type:

localhost:> yambo -D

and you will see

    [RD./SAVE//ns.db1]------------------------------------------
     Bands                           : 50
     K-points                        : 16
     G-vectors             [RL space]: 117581
     Components       [wavefunctions]: 14813
     Symmetries       [spatial+T-rev]: 4
     Spinor components               : 1
     Spin polarizations              : 1
     Temperature                 [ev]: 0.000000
     Electrons                       : 10.00000
     WF G-vectors                    : 19117
     Max atoms/species               : 2
     No. of atom species             : 2
     Magnetic symmetries             : no

This is the header of the electronic-structure database. Note the large number of plane waves used to describe the system. Note also that, at variance with purely molecular systems, the Brillouin zone (BZ) is sampled with k-points, which is needed because of the periodicity of the polymer. The number of plane waves increases with the supercell size (i.e. the amount of vacuum) in the direction orthogonal to the polymer axis. Nevertheless, such a large number of plane waves may not be strictly required to achieve reasonable accuracy in MBPT calculations, so this requirement can be relaxed. For instance, the number of plane waves can be reduced by typing:

yambo -i -V RL

and setting the MaxGvecs variable to a lower value (for instance 80000 RL). Note that this operation limits the maximum number of G-vectors that can be used in all subsequent calculations, and such an approximation has to be carefully tested.
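If you prefer to script this change (for example when testing several cutoffs), the minimal Python sketch below rewrites the value in the generated input text. It assumes that the input contains a line of the form "MaxGvecs= <number> RL". The helper set_maxgvecs is hypothetical and is not part of yambo.

```python
import re


def set_maxgvecs(input_text: str, value: int) -> str:
    """Return a copy of a yambo input text with MaxGvecs set to `value`.

    Assumes a line such as 'MaxGvecs= 117581 RL'; the unit and any trailing
    comment after the number are left untouched.
    """
    pattern = re.compile(r"^(\s*MaxGvecs\s*=\s*)\d+", re.MULTILINE)
    # A function replacement avoids ambiguous backreferences such as \180000
    new_text, count = pattern.subn(lambda m: f"{m.group(1)}{value}", input_text)
    if count == 0:
        raise ValueError("MaxGvecs not found in the input text")
    return new_text


print(set_maxgvecs("MaxGvecs= 117581 RL\n", 80000), end="")
```

Only the number is replaced, so the RL unit and any comment written by yambo -i -V RL are preserved.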

If you now open the report file r-01_setup, you will find a lot of useful information about your system. This includes a short summary of the electronic structure previously calculated at the DFT level and some details about the atomic structure and the cell, such as the direct and indirect band gaps as calculated by Quantum ESPRESSO at the LDA level:

    X BZ K-points          :  1
    Fermi Level        [ev]: -4.167134
    VBM / CBm          [ev]:  0.000000  0.986973
    Electronic Temp. [ev K]:  0.00  0.00
    Bosonic Temp.    [ev K]:  0.00  0.00
    El. density      [cm-3]:  0.161E+23
    States summary         : Full        Metallic    Empty
                             0001-0005               0006-0050
    Indirect Gaps      [ev]:  0.986973  5.091947
    Direct Gaps        [ev]:  0.986973  8.728394
    X BZ K-points          :  30

[02] Pure MPI Parallelization (yambo -p p -k Hartree -g n)

Now we will perform several GW calculations focusing on the performance of different parallelization levels. In particular, we will look at the CPU time, memory usage and load balancing resulting from each strategy.

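The parallel structure is set through pairs of ROLEs/CPU variables, one pair for the response function (X) and one for the self energy (SE), for instance:

    X_all_q_ROLEs= "q k c v"   # [PARALLEL] CPUs roles (q,k,c,v)
    X_all_q_CPU= "8 1 1 1"     # Parallelism over q points only
    SE_ROLEs= "q qp b"
    SE_CPU= "8 1 1"            # Parallelism over q points only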

or

    X_all_q_CPU= "1 8 1 1"     # Parallelism over k points only
    SE_CPU= "1 8 1"            # Parallelism over QP states

or

    X_all_q_CPU= "1 1 4 2"     # Parallelism over conduction and valence bands
    SE_CPU= "1 1 8"            # Parallelism over the bands of G
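A quick sanity check before submitting: in each of the layouts above, the product of the entries of a CPU string should equal the number of MPI tasks (8 here). The sketch below only does that arithmetic and does not call yambo. The helper check_layout is hypothetical.

```python
from math import prod


def check_layout(roles: str, cpus: str, n_tasks: int) -> dict:
    """Pair each role with its CPU count and check the product equals n_tasks."""
    role_list = roles.split()
    cpu_list = [int(c) for c in cpus.split()]
    if len(role_list) != len(cpu_list):
        raise ValueError(f"{len(role_list)} roles but {len(cpu_list)} CPU entries")
    if prod(cpu_list) != n_tasks:
        raise ValueError(f"product {prod(cpu_list)} != {n_tasks} MPI tasks")
    return dict(zip(role_list, cpu_list))


# The three layouts discussed above, each meant for 8 MPI tasks
layouts = {
    "q": [("q k c v", "8 1 1 1"), ("q qp b", "8 1 1")],
    "k": [("q k c v", "1 8 1 1"), ("q qp b", "1 8 1")],
    "cvb": [("q k c v", "1 1 4 2"), ("q qp b", "1 1 8")],
}
for name, pairs in layouts.items():
    for roles, cpus in pairs:
        print(name, check_layout(roles, cpus, 8))
```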

A template input file can be found in ./Inputs/02_QP_PPA_pure-mpi, where all the other calculation parameters have already been set (beware: the values are underconverged). The input files corresponding to the above calculations are:

- 02_QP_PPA_pure-mpi-q

- 02_QP_PPA_pure-mpi-k

- 02_QP_PPA_pure-mpi-cvb

To run these examples type

localhost:> mpiexec -n 8 yambo -F Inputs/02_QP_PPA_pure-mpi-q -J 02_QP_PPA_pure-mpi-q

and similarly for the other inputs. Of course, the choice of mpiexec rather than mpirun or another MPI launcher, as well as the number of tasks (8 here), is only an illustration. The optional -J flag is used to label the output/report/log files. This is extremely useful when a series of calculations needs to be performed, as when running convergence tests (see the yambo command-line options documentation for the full synopsis).
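When several inputs are run in a row, the command lines can be generated rather than typed. The following Python sketch builds the same mpiexec/yambo command shown above for each label, using the label both for -F and -J. The helper build_command and its defaults (launcher, Inputs directory) are assumptions for illustration. To actually launch a run, pass the returned list to subprocess.run.

```python
import shlex


def build_command(label: str, n_tasks: int, launcher: str = "mpiexec",
                  input_dir: str = "Inputs") -> list:
    """Return the argument list for one yambo run labelled with -J."""
    return [launcher, "-n", str(n_tasks), "yambo",
            "-F", f"{input_dir}/{label}", "-J", label]


labels = ["02_QP_PPA_pure-mpi-q", "02_QP_PPA_pure-mpi-k", "02_QP_PPA_pure-mpi-cvb"]
for label in labels:
    # shlex.join produces a shell-ready string for inspection or a job script
    print(shlex.join(build_command(label, 8)))
```

Reusing the input name as the -J label keeps report and log files (r-..., l-...) easy to match with the input that produced them.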

In the first example the parallel structure is set in order to distribute the q vectors over the MPI tasks. In the calculation of the dielectric screening (Xs or Xp) these are totally independent tasks, which also results in a very good load balance. This can be seen by inspecting the LOG files, typing:

localhost:> grep "PARALLEL Response" ./LOG/l-02_QP_PPA_pure-mpi-q*

showing the load distribution. The situation for the Self Energy is different for two reasons:

- The calculation of the Self Energy implies a sum over q points (an integration over the Brillouin zone), whereas q is a good quantum number for the response function X.

- The computational effort associated with the q points of the IBZ is not constant. Each q point is expanded into a different number of symmetry-equivalent points when performing the sums over the entire BZ. As a result, q vectors equivalent only to themselves (such as Gamma) give contributions that are faster to evaluate than those from q points with many equivalents.
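The effect of the second point can be illustrated with a toy model. Assume that the cost of an IBZ q point is 1 for X, and proportional to its number of symmetry-equivalent points for the self energy. Assume also that q points are dealt out round-robin to the MPI tasks. The star sizes below are hypothetical, chosen so that 16 IBZ points expand to 30 BZ points. They are not read from yambo, and the round-robin assignment is an assumption, not necessarily yambo's actual distribution.

```python
def distribute_round_robin(costs, n_tasks):
    """Assign item i to task i % n_tasks and return the total cost per task."""
    loads = [0] * n_tasks
    for i, cost in enumerate(costs):
        loads[i % n_tasks] += cost
    return loads


def imbalance(loads):
    """Ratio between the slowest task and the average task (1.0 = perfect)."""
    return max(loads) / (sum(loads) / len(loads))


# Hypothetical IBZ q points: two points equivalent only to themselves
# (e.g. Gamma), all the others with 2 equivalents in the full BZ.
star_sizes = [1] + [2] * 14 + [1]

x_loads = distribute_round_robin([1] * len(star_sizes), 8)  # X: same cost per q
se_loads = distribute_round_robin(star_sizes, 8)            # SE: cost ~ star size

print("X  loads:", x_loads, "imbalance:", imbalance(x_loads))
print("SE loads:", se_loads, "imbalance:", imbalance(se_loads))
```

With these made-up numbers X is perfectly balanced, while the self energy leaves two tasks idle for part of the time. The imbalance is mild here (about 7%) but grows with the spread in the number of equivalent points.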

It is important to keep in mind that load imbalance can result in a non-negligible time spent in communication operations, since the faster tasks have to wait for the slower ones before the contributions are summed. This can be seen in the report file by comparing the communication time spent in Xo (REDUX), which is zero in this case, with that given by GW (REDUX).

Regarding memory (one of the main bottlenecks when performing realistic calculations), q points are distributed perfectly for X, but each MPI task needs to load all the wavefunctions (WFs). In other words, while the q parallelism is very efficient with respect to communication, it does not reduce the memory allocated by each task (CPU). This can be checked by looking at the amount of memory allocated for the WFs, as well as the maximum allocated memory, in the log files. To do so, type:

localhost:> grep "Gb" ./LOG/l-02_QP_PPA_pure-mpi-q*

This example shows that running this calculation requires 0.102 Gb per task. While this looks harmless, when dealing with realistic systems this is a critical parameter to control.

When running the input Inputs/02_QP_PPA_pure-mpi-k, tasks are distributed over k points for X and over quasiparticle states for GW-PPA. In this case the situation is reversed with respect to the case illustrated above. Communication among the k contributions is required for the calculation of X, while the quasiparticle corrections for the k points requested in input are independent. This can be seen by looking at the REDUX time for X and GW in the report file. Regarding memory, the situation is unchanged with respect to the previous case (no distribution of the wavefunctions). Finally, when running:

localhost:> mpiexec -n 8 yambo -F Inputs/02_QP_PPA_pure-mpi-cvb -J 02_QP_PPA_pure-mpi-cvb

we introduce a parallelization over the bands entering the sums over states needed to build Xo and the GW-PPA self energy. In this case communication among processors cannot be avoided, but the memory is distributed among them. Indeed, by typing:

localhost:> grep "Gb" ./LOG/l-02_QP_PPA_pure-mpi-cv*

we find that the memory per processor is reduced to 0.037 Gb, compared to the 0.102 Gb we had before.
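The trend can be rationalized with a deliberately crude model. Each task holds a replicated part plus its share of the wavefunctions, and only the band levels (c, v, b) split the latter. The function and the sizes below are hypothetical and not taken from the logs of this tutorial. This is not how yambo actually accounts for memory; the sketch only shows why the cvb layout lowers the per-task figure while the q and k layouts do not.

```python
def memory_per_task(wf_total_gb: float, other_gb: float, n_band_tasks: int) -> float:
    """Toy estimate of the memory held by one MPI task.

    wf_total_gb:  memory needed by the full set of wavefunctions
    other_gb:     memory every task needs anyway (replicated)
    n_band_tasks: number of tasks sharing the band indexes (c, v, b levels);
                  1 for pure q or k parallelism, where the WFs are replicated
    """
    return other_gb + wf_total_gb / n_band_tasks


# Hypothetical sizes in Gb, NOT taken from the yambo logs of this tutorial
wf_total, other = 0.08, 0.02
print("q or k parallelism:", memory_per_task(wf_total, other, 1))
print("cvb on 8 tasks    :", memory_per_task(wf_total, other, 8))
```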

From these examples we have seen that the different parallelization strategies are not equivalent. Which strategy is best? Of course, the answer depends on the specific features of the system studied and on the available machine. Generally speaking, q parallelism is often found to increase memory usage (the wavefunctions are not distributed) and to slow down calculations because of load imbalance. By contrast, the c, v and b parallelisms involve more communication, yet they distribute memory evenly and do not lead to significant communication overheads.

Finally, in order to take advantage of the different parallelization levels, it is possible to combine them. This is shown in

Inputs/02_QP_PPA_pure-mpi-comb

where we have assigned:

    X_all_q_ROLEs= "q k c v"   # [PARALLEL] CPUs roles (q,k,c,v)
    X_all_q_CPU= "2 2 2 1"     # 2 tasks each over q, k and c
    SE_ROLEs= "q qp b"
    SE_CPU= "2 2 2"            # 2 tasks each over q, qp and b
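The combined layout is only one of many ways to split 8 tasks over the four X levels. The Python sketch below (hypothetical helper factorizations) enumerates every ordered CPU string whose product equals the number of tasks. This can help when planning the scaling exercise below. Not every string it returns is sensible: a level should not receive more tasks than it has items to distribute.

```python
def factorizations(n: int, levels: int) -> list:
    """All ordered tuples of `levels` positive integers whose product is n."""
    if levels == 1:
        return [(n,)]
    result = []
    for d in range(1, n + 1):
        if n % d == 0:
            for rest in factorizations(n // d, levels - 1):
                result.append((d,) + rest)
    return result


layouts = factorizations(8, 4)  # candidate X_all_q_CPU strings for "q k c v"
print(len(layouts), "candidate strings, for example:")
for layout in layouts[:5]:
    print('X_all_q_CPU= "' + " ".join(map(str, layout)) + '"')
```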

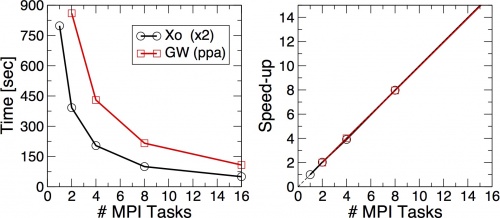

Exercise: MPI scaling from 1 to 16 tasks (cores) for the cvb parallelism

Pure MPI scaling of the cvb-parallelism.

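To turn the measured timings of the scaling exercise into speedup and parallel efficiency, a small helper such as the one below can be used. It is a sketch: the function name is hypothetical, and the wall times must be read from your own report files. When the smallest run uses more than one task, the sketch assumes ideal scaling up to that point, so the speedup is computed as n_ref * T(n_ref) / T(n).

```python
def scaling_table(timings: dict) -> dict:
    """timings: {n_tasks: wall time in s}.

    Returns {n_tasks: (speedup, efficiency)} relative to the smallest task
    count measured, assuming ideal scaling up to that reference point.
    """
    n_ref = min(timings)
    t_ref = timings[n_ref]
    table = {}
    for n in sorted(timings):
        speedup = n_ref * t_ref / timings[n]
        table[n] = (speedup, speedup / n)
    return table


# Placeholder timings in seconds (replace with your own measurements)
for n, (s, e) in scaling_table({1: 100.0, 2: 50.0, 4: 40.0}).items():
    print(f"{n:3d} tasks: speedup {s:5.2f}, efficiency {e:4.2f}")
```

An efficiency well below 1 at large task counts usually signals communication overhead or load imbalance, the same effects discussed above.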

[03] Pure OpenMP Parallelization (yambo -p p -k Hartree -g n)

To run these examples type

localhost:> export OMP_NUM_THREADS=8

localhost:> yambo -F Inputs/03_QP_PPA_pure-omp -J 03_QP_PPA_pure-omp

At variance with the MPI case, no specific launcher (mpiexec, mpirun, ...) is needed to take advantage of OpenMP. The number of threads can be set through the OMP_NUM_THREADS environment variable, as long as the *_threads variables in the input file are set to 0.

When these variables are set to a value larger than zero, they override the number provided by OMP_NUM_THREADS. Setting them allows fine tuning when a large number of threads is used, since different runlevels may scale differently with respect to the number of OpenMP threads.
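The precedence rule just described can be written down as a tiny function. This is a sketch of the rule, not of yambo's code. The runlevel names in the example dictionary are illustrative only, so check the *_threads variables actually present in your generated input. The fallback of 1 thread when OMP_NUM_THREADS is unset is an assumption; the real default depends on the OpenMP runtime.

```python
import os


def effective_threads(input_threads: int, env=None) -> int:
    """Threads used by one runlevel according to the precedence rule above.

    input_threads: value of the corresponding *_threads input variable
    env:           mapping used instead of os.environ (handy for testing)
    """
    env = os.environ if env is None else env
    if input_threads > 0:
        # A positive value in the input file overrides the environment
        return input_threads
    # 0 defers to OMP_NUM_THREADS; the fallback of 1 is an assumption
    return int(env.get("OMP_NUM_THREADS", "1"))


# Hypothetical per-runlevel settings (names are illustrative only)
settings = {"X": 0, "SE": 4}
for runlevel, value in settings.items():
    print(runlevel, effective_threads(value, {"OMP_NUM_THREADS": "8"}))
```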

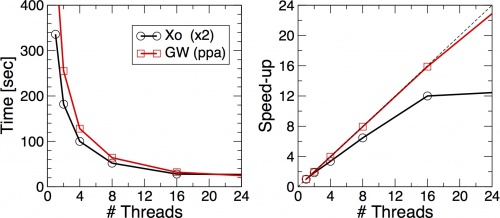

Exercise: OpenMP scaling from 1 to 16 threads

Pure OpenMP scaling. Note that nodes with 16 physical cores have been used.


As can be seen from the timing results for both X and GW (PPA), pure OpenMP is usually less efficient than pure MPI in terms of time-to-solution. Nevertheless, it can be extremely useful for memory management, since different threads work on the same shared memory (there is no need for data replication, as there is with MPI). As you will see in the next section, OpenMP is best exploited together with MPI, in order to combine the features of both paradigms.

[04] Hybrid MPI/OpenMP Parallelization (yambo -p p -k Hartree -g n)

To run these examples type

localhost:> export OMP_NUM_THREADS=2

localhost:> mpiexec -n 4 yambo -F Inputs/04_QP_PPA_hyb-mpi-omp -J 04_QP_PPA_hyb-mpi-omp
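With 4 MPI tasks and 2 threads each, this run uses the same 8 cores as the pure MPI and pure OpenMP examples. Comparing the runs therefore isolates the effect of the MPI/OpenMP split. The toy bookkeeping below makes that explicit. It uses the 16-core nodes mentioned above as the default, and it counts a hypothetical replicated memory once per MPI task, because the threads of a task share it. Thread-private buffers are ignored, so the memory figure is only indicative.

```python
def hybrid_layout(n_mpi: int, n_threads: int, replicated_gb: float,
                  cores_per_node: int = 16) -> dict:
    """Toy bookkeeping for an MPI x OpenMP run on a single node.

    replicated_gb: memory each MPI task holds privately (hypothetical value);
    OpenMP threads of the same task share it, so it is counted once per task.
    """
    cores = n_mpi * n_threads
    return {
        "cores": cores,
        "fits_node": cores <= cores_per_node,
        "node_memory_gb": n_mpi * replicated_gb,
    }


# The same 8 cores split differently between MPI tasks and OpenMP threads
for n_mpi, n_thr in [(8, 1), (4, 2), (2, 4), (1, 8)]:
    print(f"{n_mpi} MPI x {n_thr} threads ->", hybrid_layout(n_mpi, n_thr, 0.1))
```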

Comparing the timing, memory usage and load balancing of this case with the examples above is left as an exercise.

References

[1] Trans-polyacetylene (TPA) on Wikipedia.

[2] D. Varsano, A. Marini, and A. Rubio, Phys. Rev. Lett. 101, 133002 (2008).

[3] E. Cannuccia and A. Marini, Phys. Rev. Lett. 107, 255501 (2011).

[4] M. Rohlfing and S. G. Louie, Phys. Rev. Lett. 82, 1959 (1999).